How YouTube’s algorithm ends up promoting far-right content

YouTube’s algorithm is propelling far-right content into unsuspecting viewers’ lives.

February 20, 2021

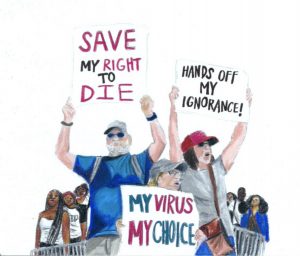

On Jan. 6, 2021, a group of armed insurrectionists laid siege to the Capitol. Believing a conspiracy theory that the 2020 election had been stolen from former President Donald Trump, they stormed the building to prevent Congress from certifying the Electoral College results. How did we reach the point at which one in every three voters believes that the election, a cornerstone of our nation, was rigged?

Many of those who took part in the insurrection on the sixth were radicalized through social media and online forums. These spread not only lies about voter fraud but also claims of a so-called “Deep State,” in which top Democratic officials such as Speaker of the House Nancy Pelosi and former Secretary of State Hillary Clinton supposedly ran a satanic cult that Trump was trying to root out. But many of the voices who would come to promote and spread these beliefs didn’t start on 4chan or Facebook; they started on YouTube.

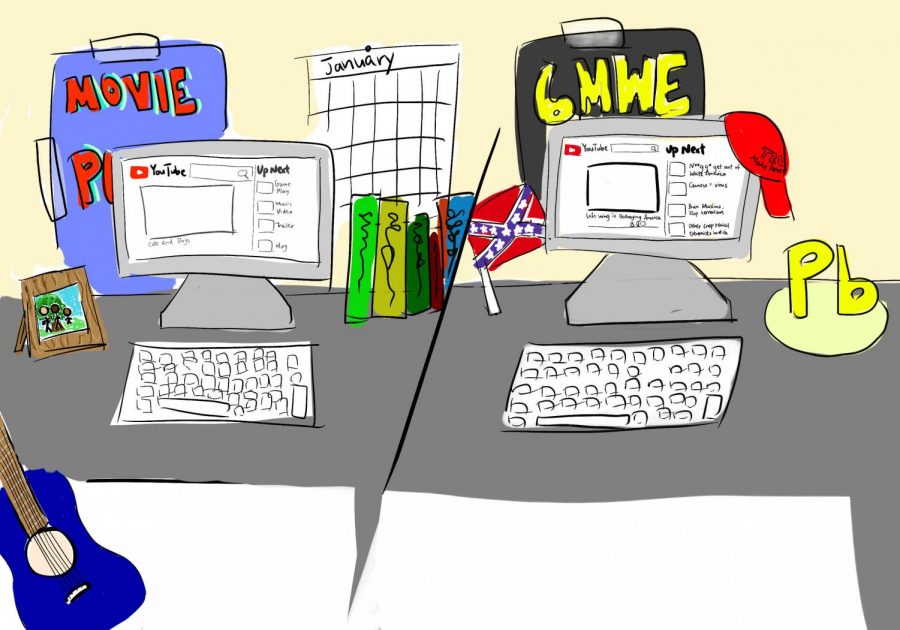

On the surface, YouTube may not seem like the best place to spread conspiracies: making a video requires more effort than sending a tweet, and the site lacks built-in features for easily sharing videos or comments. However, the YouTube algorithm has allowed people to fall into a far-right “rabbit hole.” Essentially, this means that over time, clicking on videos featuring right-wing YouTubers can send someone snowballing down a slope from which it is hard to return.

The initial channels and videos may be only mildly right-wing, or not even political on the surface, but as viewers watch more, the algorithm recommends more related videos and channels, each leaning slightly further right.

For example, Joe Rogan, a podcaster whose channel has over 10 million subscribers, holds fairly mixed political views: he endorsed Sen. Bernie Sanders in the 2020 Democratic primary but ultimately voted for Donald Trump in the 2020 presidential election.

Rogan has hosted people on the left, such as the aforementioned Bernie Sanders, but has also given a platform to conspiracy theorist Alex Jones. Alongside these interviews, the algorithm recommends not only conspiracy theorists like Jones or Proud Boys founder Gavin McInnes but also their associates, who, unlike Jones, at first appear to be simply offering self-help.

These videos often target young white men who are struggling with issues such as depression, and they frequently claim that the sources of these men’s problems are cultural developments such as modern feminism, Black Lives Matter and LGBTQ+ rights. Channels named in a study of how YouTube spreads far-right beliefs include those of former University of Toronto professor Jordan Peterson, who has over three million subscribers, and former Daily Show intern Dave Rubin, who has 1.5 million, though the two audiences likely overlap.

These creators may identify as conventionally right-wing, but their content leads the YouTube algorithm to link not only to other conservative content but to further-right content as well. One person who often gets linked as a result is Richard Spencer, a neo-Nazi and alt-right figure who was a prominent participant in the “Unite the Right” rally in Charlottesville.

So what is YouTube doing about it? Arguably, not enough. YouTube has held events promoting social justice, such as spotlighting LGBTQ+ creators during Pride Month. The platform has also taken steps to crack down on conspiracy theories by tweaking its recommendation algorithms so that far-right videos are harder to find. However, this doesn’t change the fact that those videos are still up and that the theorists are evading platform bans.

YouTube has also failed to enforce its own terms of service, as in the case of Steven Crowder, who sent homophobic threats to fellow content creator Carlos Maza.

YouTube acted only after it faced backlash from people across social media condemning Crowder, and it remonetized Crowder’s channel a little over a year later. YouTube has to enforce its terms of service and act against those who violate them. If it doesn’t, it’s important that both content creators and viewers pressure YouTube into doing its job whenever someone uses the platform to threaten another person.

Since the Jan. 6 insurrection, YouTube has begun suspending channels that claim election fraud and has banned Steve Bannon’s channel, but the underlying issue remains.

YouTube’s algorithm may seem unrelated to the rise of the far right, but the system has inadvertently produced a breeding ground for undemocratic conspiracy theories that go beyond accusations of a fraudulent election. YouTube may promote social justice, but unless it reforms the underlying system, the site will continue to be used to radicalize people into the far right, resulting in more tragedies like those in Charlottesville and on Jan. 6.